Generative AI has rapidly become an integral part of how we work. From AI pair programmers that suggest code to smart assistants that draft emails, employees across industries are embracing new AI tools to boost productivity. A striking example comes from Coinbase, where the CEO issued a strict mandate that engineers start using AI coding tools – even firing those who failed to onboard within a week. His goal? To have 50% of the company’s code generated by AI by the end of the quarter.

This anecdote highlights the seriousness with which organizations are approaching AI adoption. But beyond high-profile cases, many workplaces are grappling with a common question: How are our employees utilizing AI tools, and how do we effectively track their use?

We'll explore Cursor AI as a prime example of an AI tool in the workplace, and discuss how to track its usage among employees. Cursor AI is a popular AI-driven coding assistant, but the lessons here apply broadly to AI tools across roles. We’ll cover why employees use such tools, why organizations should monitor usage, what metrics to look at, and how to do it in a way that’s both effective and employee-friendly. By the end, you’ll see how a solution like Worklytics can provide the insights needed to maximize the value of AI in your organization.

To help organizations measure how the tool is used, Cursor includes an Analytics Dashboard for team admins. This built-in dashboard transforms the nebulous concept of “AI coding assistant usage” into concrete numbers and charts. What can you track via Cursor’s native analytics? Key metrics include:

See the big picture with aggregate stats such as total Tab completions (Cursor’s inline autocomplete suggestions) and total AI requests over a chosen period. These totals show how heavily your team relies on AI. For example, hundreds of accepted AI code completions in a single week indicate that Cursor has become a regular part of the team’s coding.

Instead of singling out individuals, Cursor provides averages. You can track how many Tab suggestions a typical developer is shown and accepts, and how many lines of AI-generated code make it into their work each day. These numbers reveal how deeply Cursor is embedded in day-to-day workflows without spotlighting any one person’s usage.
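If you export per-user daily rows (for example from the Admin API), the totals and averages described above can be reproduced with a few lines of Python. This is a minimal sketch, assuming a hypothetical `DailyUsage` record shape; Cursor’s own export uses its own field names. Averages are taken over *active* user-days so that idle seats do not drag the numbers down, and the optional `since` argument mirrors the dashboard’s “last N days” filter.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DailyUsage:
    """One developer's activity on one day (hypothetical schema, not Cursor's)."""
    user: str
    day: date
    tabs_shown: int
    tabs_accepted: int
    accepted_lines: int
    requests: int


def summarize(records: list[DailyUsage], since: Optional[date] = None) -> dict[str, float]:
    """Team-wide totals plus averages per active user-day, optionally from `since` onward."""
    window = [r for r in records if since is None or r.day >= since]
    totals = {
        "tabs_accepted": sum(r.tabs_accepted for r in window),
        "accepted_lines": sum(r.accepted_lines for r in window),
        "requests": sum(r.requests for r in window),
    }
    # Average only over user-days with some activity, so idle seats don't dilute the numbers.
    active = [r for r in window if r.tabs_shown > 0 or r.requests > 0]
    n = len(active)
    averages = {
        "avg_tabs_accepted": sum(r.tabs_accepted for r in active) / n if n else 0.0,
        "avg_accepted_lines": sum(r.accepted_lines for r in active) / n if n else 0.0,
    }
    return {**totals, **averages}
```

Whether to average over active user-days or over all licensed seats is a design choice: the first describes how people who use the tool work with it, the second folds adoption into the same number.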

Cursor also tracks adoption breadth with weekly and monthly active user counts. If half your licensed developers are not touching the tool, that is a red flag. On the flip side, a steady rise in active users shows momentum. Leadership can set adoption goals, such as “90% of engineers use Cursor weekly,” and track progress directly in the dashboard.
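An adoption goal like “90% of engineers use Cursor weekly” reduces to a simple ratio: licensed users with at least one active day in the week, divided by all licensed users. The sketch below assumes you already have, per user, a list of dates on which they were active; the input shape and function names are hypothetical.

```python
from datetime import date, timedelta

ADOPTION_GOAL = 0.90  # example target from the text: "90% of engineers use Cursor weekly"


def weekly_active_share(activity: dict[str, list[date]], licensed: set[str], week_start: date) -> float:
    """Fraction of licensed users with at least one active day in the 7 days from week_start."""
    week_end = week_start + timedelta(days=7)
    active = {
        user
        for user, days in activity.items()
        # Ignore activity from accounts that are not in the licensed population.
        if user in licensed and any(week_start <= d < week_end for d in days)
    }
    return len(active) / len(licensed) if licensed else 0.0


def meets_goal(share: float, goal: float = ADOPTION_GOAL) -> bool:
    """True when the weekly active share reaches the adoption target."""
    return share >= goal
```

Using the licensed population as the denominator (rather than everyone who happened to be active) is what makes unused seats visible as a red flag.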

Acceptance rate is a proxy for trust. Cursor logs how many AI suggestions are shown and how many developers actually accept. If developers accept 30 of 100 suggestions shown, that is a 30% acceptance rate. A healthy rate means developers find the AI helpful but remain selective, while a low rate could indicate that the suggestions are a poor fit for the codebase or that users need more guidance.
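The acceptance-rate calculation described above is just accepted suggestions divided by suggestions shown, counted in the same unit. This minimal sketch (function names are illustrative) adds basic input checks and a helper for computing the rate week by week, which is how a trend such as a climb from 25% to 35% would show up.

```python
def acceptance_rate(shown: int, accepted: int) -> float:
    """Accepted suggestions divided by suggestions shown (0.0 when nothing was shown)."""
    if shown < 0 or accepted < 0:
        raise ValueError("counts must be non-negative")
    if accepted > shown:
        raise ValueError("cannot accept more suggestions than were shown")
    return accepted / shown if shown else 0.0


def weekly_rates(weeks: list[tuple[int, int]]) -> list[float]:
    """Rate per week from (shown, accepted) pairs, for spotting trends over time."""
    return [acceptance_rate(shown, accepted) for shown, accepted in weeks]
```

Mixing units (for example, accepted *lines* over shown *suggestions*) would produce a number that is not a rate at all, so keep numerator and denominator in the same unit.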

For advanced plans, Cursor analytics show which features your team leans on most. Maybe the chat-based assistant is heavily used, while automated bug-fixing lags behind. This breakdown helps you understand where AI is making the biggest impact, and where adoption efforts might be needed to unlock underutilized features.
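A feature breakdown like this is a share-of-total calculation over interaction events. The sketch assumes each event has already been labeled with a feature name; the labels in the usage example (`"chat"`, `"agent"`, `"bug-fix"`) are hypothetical and do not correspond to any specific Cursor export.

```python
from collections import Counter


def feature_share(events: list[str]) -> dict[str, float]:
    """Share of AI interactions per feature, ordered from most to least used."""
    counts = Counter(events)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() yields (feature, count) pairs sorted by count, descending.
    return {feature: n / total for feature, n in counts.most_common()}
```

For example, `feature_share(["chat"] * 6 + ["agent"] * 3 + ["bug-fix"])` puts chat first at 60% of interactions, making the under-used feature easy to spot.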

All these metrics are accessible in Cursor’s team dashboard in near real-time. Team admins can filter by time ranges (e.g. last 7 days, last 30 days) to spot trends.

Cursor’s Admin API also lets you retrieve daily metrics on code edits, AI assistance usage, and user spending programmatically.
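Below is a standard-library sketch of requesting daily usage data. It assumes the endpoint is `POST https://api.cursor.com/teams/daily-usage-data`, authenticated with HTTP Basic auth (API key as username, empty password), with `startDate`/`endDate` given as Unix epoch milliseconds. These details reflect our reading of Cursor’s documentation and should be verified against the current Admin API reference; the function names are hypothetical. Building the request is kept separate from sending it, so the request can be inspected without credentials or network access.

```python
import base64
import json
import urllib.request
from datetime import datetime, timezone

# ASSUMPTION: endpoint path, auth scheme and body fields are unverified; check Cursor's docs.
DAILY_USAGE_URL = "https://api.cursor.com/teams/daily-usage-data"


def to_epoch_ms(dt: datetime) -> int:
    """Convert a timezone-aware datetime to Unix epoch milliseconds."""
    if dt.tzinfo is None:
        raise ValueError("use timezone-aware datetimes to avoid local-time ambiguity")
    return int(dt.timestamp() * 1000)


def build_daily_usage_request(api_key: str, start: datetime, end: datetime) -> urllib.request.Request:
    """Build (but do not send) a POST request for per-user daily usage rows."""
    # Assumed Basic auth: API key as the username, empty password.
    token = base64.b64encode(f"{api_key}:".encode()).decode()
    body = json.dumps({"startDate": to_epoch_ms(start), "endDate": to_epoch_ms(end)}).encode()
    return urllib.request.Request(
        DAILY_USAGE_URL,
        data=body,
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )


def fetch_daily_usage(api_key: str, start: datetime, end: datetime) -> dict:
    """Send the request and decode the JSON response (requires network access)."""
    req = build_daily_usage_request(api_key, start, end)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

A typical call would be `fetch_daily_usage(key, datetime(2025, 1, 1, tzinfo=timezone.utc), datetime(2025, 1, 8, tzinfo=timezone.utc))`, with the key read from an environment variable or secret store rather than hard-coded.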

Cursor’s built-in analytics give valuable insights into how developers interact with the tool. But when it comes to understanding AI adoption across the entire organization, there are some important gaps. Here are three big limitations to keep in mind:

Organizations rarely rely on a single AI tool. Cursor may be the official coding assistant, but developers might also use GitHub Copilot, while other employees lean on ChatGPT Enterprise or Notion AI. Each platform may have its own stats, but they live in silos.

This fragmented view makes it difficult for leaders to see the bigger picture. For example, you might see strong Cursor usage in engineering, but have no visibility into how other departments are engaging with AI. Without a unified lens across tools, executives risk missing organization-wide trends, adoption patterns, and best practices.

Cursor reports on activity inside the IDE, but it does not explain the business impact. You can see an acceptance rate climb from 25% to 35%, which looks promising, but does that improvement translate into faster release cycles, fewer bugs, or happier employees?

Answering those questions requires connecting Cursor data with broader business metrics like sprint velocity, defect counts, or delivery timelines. Cursor does not perform that correlation automatically. As a result, many organizations either export the data manually and combine it with other sources, or rely on external analytics platforms that integrate usage data with productivity outcomes.
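Once usage and outcome data are exported, the manual combination step usually means aligning both series on a shared period (here, ISO week labels) and computing a correlation. This sketch uses a hand-written Pearson correlation; the week keys and the choice of cycle time as the outcome are illustrative assumptions. A strong correlation shows that two measures move together, not that one causes the other.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def usage_outcome_correlation(usage: dict[str, float], outcome: dict[str, float]) -> float:
    """Correlate a weekly usage metric with a weekly outcome metric.

    Only weeks present in both series are used, so gaps in either export are skipped.
    """
    weeks = sorted(usage.keys() & outcome.keys())
    if len(weeks) < 3:
        raise ValueError("need at least three overlapping weeks")
    return pearson([usage[w] for w in weeks], [outcome[w] for w in weeks])
```

A value near -1 between weekly acceptance rate and cycle time in days would mean cycle time tends to fall as acceptance rises; confirming why requires looking beyond the two series.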

Finally, Cursor’s analytics are designed for engineering oversight, not enterprise-wide monitoring. Compliance teams may need alerts if employees use unapproved AI tools, or a centralized view of AI activity for audits. Cursor alone cannot flag off-platform usage, such as employees experimenting with ChatGPT outside approved channels, nor can it enforce AI usage policies across different systems. Companies with these requirements often turn to external solutions that track AI usage at the organizational level, rather than just within a single application.

In short, Cursor’s built-in analytics are necessary but not always sufficient for enterprise AI governance. They give excellent insight into how engineers use Cursor itself, which is great for engineering managers measuring team adoption and coding impact. But strategic decision-makers (IT leadership, HR analytics, etc.) often require a more holistic and correlated dataset: one that spans multiple tools and ties usage to outcomes like productivity, quality, or even cost savings. This is where third-party analytics platforms come into play.

To achieve a comprehensive, organization-wide view of AI tool usage, many companies are turning to specialized analytics solutions. These platforms act as a central hub to monitor and analyze AI usage across all the different apps and services employees might be using. One leading example is Worklytics, which has positioned itself as a privacy-friendly “AI usage checker” for the workplace.

What is Worklytics? It’s an analytics platform that integrates with your company’s existing software ecosystem to surface behavioral insights – including how teams adopt AI. Worklytics can ingest usage logs and metadata from a wide array of tools (with pre-built connectors for many enterprise systems). Specifically, Worklytics provides comprehensive AI usage tracking by integrating with popular AI tools – including ChatGPT Enterprise, Microsoft 365 Copilot, GitHub Copilot, Google’s generative AI, and even developer tools like Cursor. In other words, if your organization uses a mix of AI platforms, Worklytics can pull in data from all of them and consolidate it into a single dashboard.

Key features that Worklytics (and similar platforms) bring to the table include:

Worklytics offers an out-of-the-box AI Adoption Dashboard that merges data from multiple sources into one view. For example, it can show AI engagement levels across engineering (Cursor, Copilot usage), customer support (a CRM AI assistant), and general staff (enterprise chatbot usage). You can filter by tool or team, compare trends week over week, and set targets. This unified perspective ensures leadership sees the whole picture of AI adoption rather than isolated reports per tool.

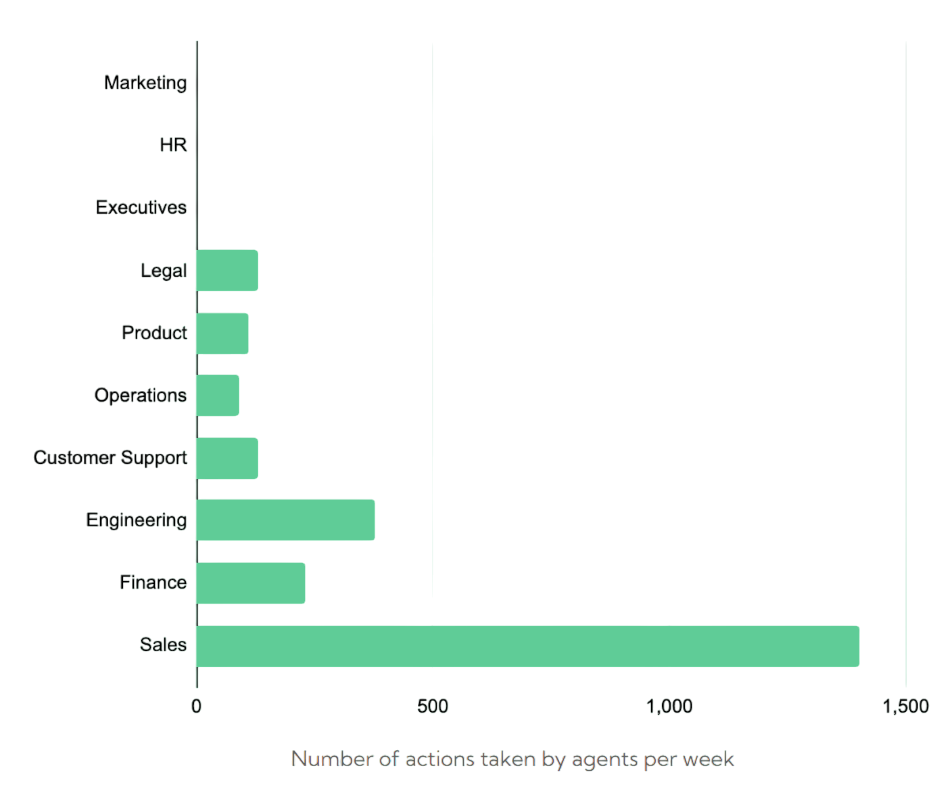

Because Worklytics ties into company directories and team structures, it can break down AI usage by department, role, or geography. Maybe your data science team is heavy on AI, but your finance team hardly touches it – the dashboard will make that clear. It automatically flags "power users" with high engagement and "lagging teams" where adoption is minimal. If the sales team’s AI usage is near zero, you might need to investigate blockers or provide targeted training for that group.
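The kind of breakdown described above can be sketched as a join between a directory (user to department) and per-user activity counts. This is not Worklytics’ implementation; it is a minimal illustration in which the thresholds `lagging_below` and `power_user_min` are hypothetical values you would tune for your organization.

```python
from collections import defaultdict


def department_adoption(directory: dict[str, str], weekly_actions: dict[str, int]) -> dict[str, float]:
    """Share of each department's members with at least one AI action this week."""
    members: dict[str, int] = defaultdict(int)
    active: dict[str, int] = defaultdict(int)
    for user, dept in directory.items():
        members[dept] += 1
        if weekly_actions.get(user, 0) > 0:
            active[dept] += 1
    return {dept: active[dept] / members[dept] for dept in members}


def flag_adoption(
    directory: dict[str, str],
    weekly_actions: dict[str, int],
    lagging_below: float = 0.2,  # hypothetical threshold for a "lagging" department
    power_user_min: int = 50,    # hypothetical weekly action count for a "power user"
) -> tuple[list[str], list[str]]:
    """Return (lagging departments, power users), both sorted for stable output."""
    adoption = department_adoption(directory, weekly_actions)
    lagging = sorted(d for d, share in adoption.items() if share < lagging_below)
    power_users = sorted(
        u for u, n in weekly_actions.items() if u in directory and n >= power_user_min
    )
    return lagging, power_users
```

Measuring departments by the share of members who are active, rather than by total actions, keeps one enthusiastic user from making an otherwise idle team look engaged.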

Unlike self-reported surveys or manual tracking, Worklytics continuously ingests system logs and updates metrics daily. It pseudonymizes and aggregates these logs, processing the frequency and type of actions without exposing sensitive personal details. The result is accurate, up-to-date insight into how work gets done, without burdening employees.
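To make “pseudonymization” concrete, one common technique is a keyed hash (HMAC-SHA256): the same person always maps to the same token, so activity can still be counted over time, but without the secret key nobody can match tokens back to email addresses by hashing candidates. The sketch below is a generic illustration of that technique, not a description of how Worklytics does it; the event fields (`user`, `tool`, `action`, `day`, `prompt`) are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Stable keyed pseudonym for an identifier such as an email address."""
    normalized = identifier.strip().lower()  # so "Ana@X.com " and "ana@x.com" match
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


KEPT_FIELDS = ("tool", "action", "day")  # metadata only; any content fields are dropped


def sanitize_event(event: dict, key: bytes) -> dict:
    """Replace the user identifier with a pseudonym and keep only metadata fields."""
    clean = {field: event[field] for field in KEPT_FIELDS if field in event}
    clean["user"] = pseudonymize(event["user"], key)
    return clean
```

Using an allow-list of kept fields, rather than a deny-list of removed ones, means a new content field added upstream is dropped by default.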

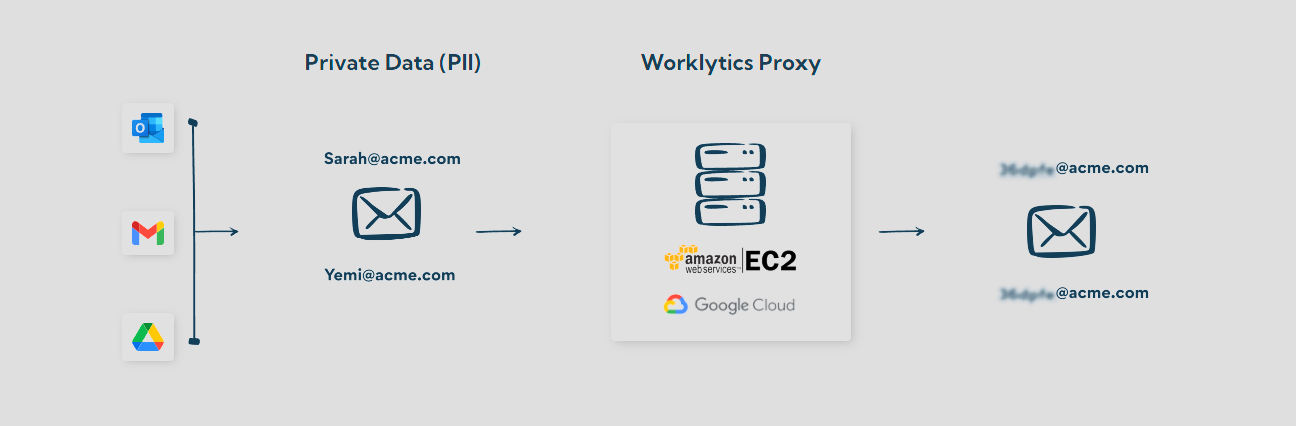

A major concern when tracking employee tool usage is privacy. Worklytics is built with a privacy-first design: it anonymizes data, never stores the content of AI prompts or outputs, and complies with GDPR and CCPA by default. The goal is to glean patterns (who is using AI and how often) without spying on the substance of anyone’s work. For example, Worklytics might show that a team averaged 50 AI queries per week, but not the queries themselves. This allows HR and compliance teams to support AI monitoring, knowing that employee privacy is respected. Unlike invasive monitoring software, Worklytics focuses on aggregate trends and metadata, not keystroke logging or screen capturing.
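Reporting “a team averaged 50 AI queries per week” without revealing individuals usually also requires a minimum group size: an average over two people says a lot about each of them. The sketch below shows that suppression rule as a generic technique, not as Worklytics’ documented behavior; `MIN_GROUP_SIZE = 5` is a hypothetical threshold, and the input carries only counts, never query text.

```python
from collections import defaultdict
from typing import Optional

MIN_GROUP_SIZE = 5  # hypothetical threshold; choose one that fits your privacy policy


def team_weekly_averages(
    rows: list[tuple[str, str, int]], min_size: int = MIN_GROUP_SIZE
) -> dict[str, Optional[float]]:
    """Average AI queries per person per team from (team, user, query_count) rows.

    Teams with fewer than `min_size` distinct people are suppressed (reported as None).
    """
    per_team: dict[str, dict[str, int]] = defaultdict(dict)
    for team, user, queries in rows:
        per_team[team][user] = per_team[team].get(user, 0) + queries
    result: dict[str, Optional[float]] = {}
    for team, users in per_team.items():
        if len(users) < min_size:
            result[team] = None  # too few people: the average could expose individuals
        else:
            result[team] = sum(users.values()) / len(users)
    return result
```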

Perhaps most powerfully, Worklytics helps correlate AI usage with productivity outcomes. It can overlay adoption data with other performance metrics such as project throughput, ticket resolution times, or code review turnaround. For instance, after rolling out an AI assistant to customer support, you might discover that the support team handled 20% more tickets per week. Similarly, Worklytics can quantify whether higher Cursor usage in engineering aligns with faster release cycles or fewer bugs. These insights turn raw usage data into evidence of ROI, helping leaders celebrate wins and justify further AI investment.

While primarily an analytics tool, Worklytics can support compliance by identifying anomalies. It can highlight if someone is not using approved tools or suddenly starts using an unknown AI app. Its focus is on adoption trends, but paired with other tools, it can contribute to enforcing policies around sanctioned platforms.
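At its core, flagging unapproved tools is a comparison of observed AI tool usage against an allow-list. This sketch assumes some upstream source (for example SSO sign-in logs or a SaaS discovery tool) already yields `(pseudonymized_user, tool_name)` events; the tool names and the `APPROVED_TOOLS` set are hypothetical, and this is not how Worklytics or Cursor implements the check. It counts distinct users per unapproved tool, which supports follow-up at the team level without listing who did what.

```python
from collections import defaultdict

# Hypothetical allow-list; names must match whatever your event source emits.
APPROVED_TOOLS = frozenset({"cursor", "github-copilot", "chatgpt-enterprise"})


def unapproved_usage(
    events: list[tuple[str, str]], approved: frozenset[str] = APPROVED_TOOLS
) -> dict[str, int]:
    """Count distinct (pseudonymized) users per AI tool that is not on the allow-list."""
    users_by_tool: dict[str, set[str]] = defaultdict(set)
    for user, tool in events:
        name = tool.strip().lower()  # normalize so "Cursor" matches "cursor"
        if name not in approved:
            users_by_tool[name].add(user)
    return {tool: len(users) for tool, users in sorted(users_by_tool.items())}
```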

In summary, third-party platforms like Worklytics serve as a strategic lens on AI usage across the enterprise. They fill the gaps left by tool-specific analytics like Cursor’s, providing a holistic, cross-tool view that ties usage to outcomes – all while respecting privacy. By using such a solution in tandem with Cursor’s native analytics, organizations get the best of both worlds: detailed Cursor-specific metrics for engineering management, and higher-level AI adoption dashboards for company-wide strategy.

In conclusion, tracking how employees utilize Cursor AI (and other AI tools) is about more than just monitoring activity – it’s about understanding and maximizing the value of these tools in your organization. By carefully measuring adoption, encouraging usage through support and best practices, and leveraging platforms like Worklytics to gather meaningful insights, you can ensure that AI assistance becomes a true asset rather than a black box. The era of AI in the workplace is here, and those who manage its adoption with clarity and purpose will lead the way in productivity, innovation, and employee empowerment. Worklytics can be the partner that helps you chart this course, turning AI usage data into smarter decisions and real business value.