Building resilient GenAI adoption dashboards requires architecting for API instability from the start. With vendor API uptime dropping to 99.46% and 75% of knowledge workers using AI tools, organizations need multi-provider pipelines, intelligent caching, and circuit breakers to maintain continuous visibility into AI adoption patterns even during outages.

• API downtime increased 60% year-over-year, with average uptime falling from 99.66% to 99.46% between Q1 2024 and Q1 2025

• Enterprise AI spending jumped from $2.3 billion in 2023 to $13.8 billion in 2024, reflecting the shift from pilots to production deployments

• Downtime costs average $9,000 per minute for large organizations when monitoring systems fail

• 71% of organizations now rely on third-party APIs from SaaS vendors, making multi-provider strategies essential

• A Fortune 500 firm achieved 28% adoption growth despite weekly API incidents by implementing resilient dashboard architecture

• EU AI Act violations can result in fines up to 7% of global revenue for non-compliant HR Tech systems using AI

Generative AI adoption has exploded across enterprises. But with API uptime dropping and vendor outages becoming more frequent, how do you maintain visibility into employee AI usage when the data pipelines themselves break?

The scale of AI adoption today makes reliable monitoring critical. Enterprise AI spending increased from $2.3 billion in 2023 to $13.8 billion in 2024, a sixfold increase reflecting the shift from pilots to production deployments. With 75% of global knowledge workers now using AI tools regularly, organizations need real-time dashboards to track adoption across teams.

However, the infrastructure supporting these dashboards faces unprecedented strain. Average API uptime fell from 99.66% to 99.46% between Q1 2024 and Q1 2025, resulting in 60% more downtime year-over-year. This degradation directly impacts AI monitoring systems that depend on stable API connections to platforms like Slack, Microsoft Copilot, and Google Gemini.
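The downtime figure follows directly from the two uptime numbers. As a quick sanity check, here is a minimal sketch of the arithmetic; the 90-day quarter is an assumption used only to express the percentages as minutes.

```python
def downtime_fraction(uptime_percent: float) -> float:
    """Convert an uptime percentage into the fraction of time spent down."""
    return (100.0 - uptime_percent) / 100.0


def relative_downtime_increase(old_uptime: float, new_uptime: float) -> float:
    """Relative growth in downtime when uptime moves from old to new."""
    old_down = downtime_fraction(old_uptime)
    new_down = downtime_fraction(new_uptime)
    return (new_down - old_down) / old_down


QUARTER_MINUTES = 90 * 24 * 60  # assumption: a 90-day quarter

increase = relative_downtime_increase(99.66, 99.46)
print(f"Relative downtime increase: {increase:.0%}")
print(f"Downtime at 99.66%: {downtime_fraction(99.66) * QUARTER_MINUTES:.0f} min per quarter")
print(f"Downtime at 99.46%: {downtime_fraction(99.46) * QUARTER_MINUTES:.0f} min per quarter")
```

The result is just under 59%, which is the roughly 60% increase quoted above: a 0.2-point drop in uptime looks small, but it is measured against a very small downtime base.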

Modern analytics platforms are evolving to give leaders real-time visibility into how AI tools are being adopted across their organizations. Yet when vendor APIs fail, these dashboards go dark — leaving organizations blind to adoption patterns at critical moments.

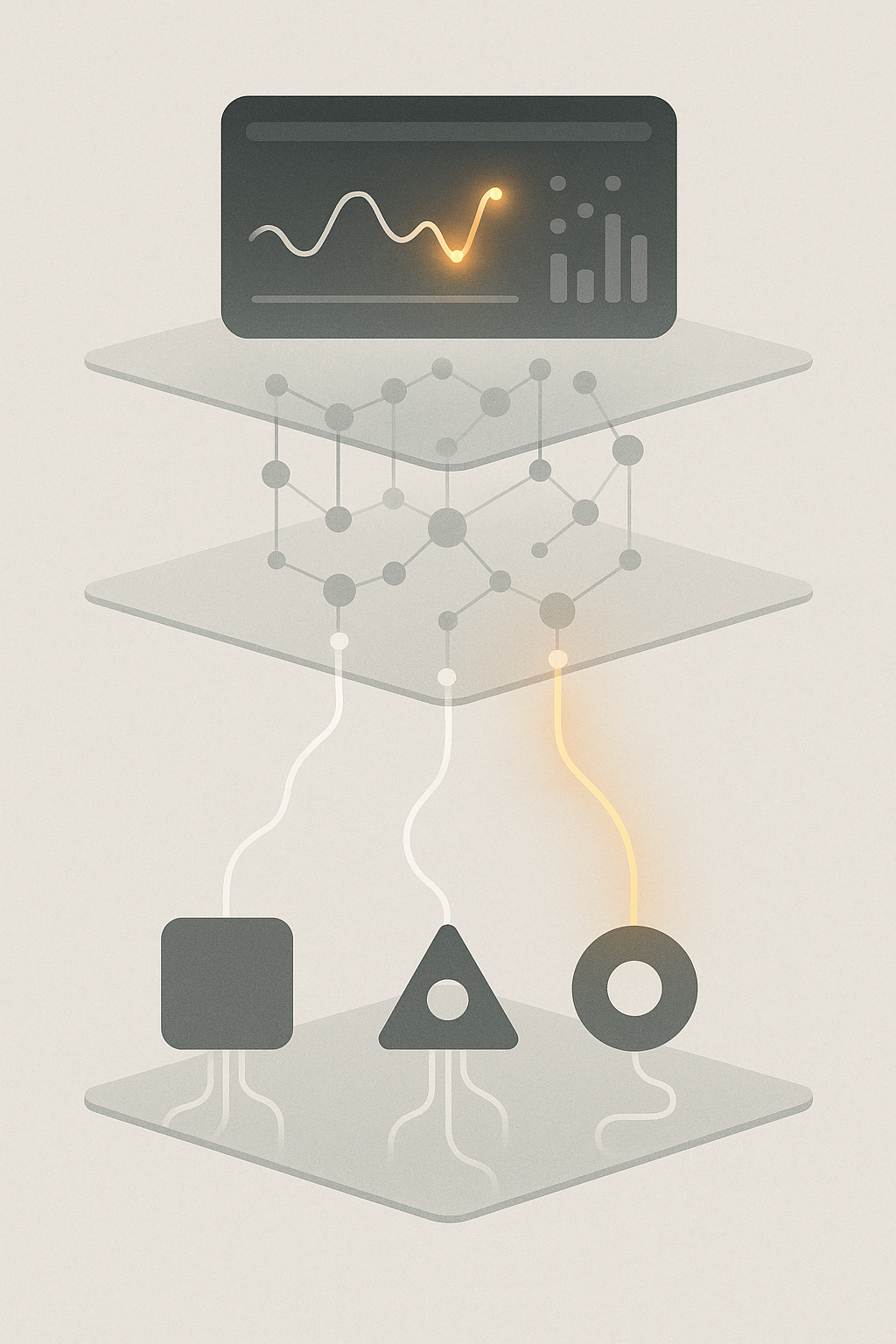

API failures create cascading problems for AI adoption tracking. With API downtime increasing 60% year-over-year, dashboards experience frequent data gaps that prevent accurate measurement of usage trends and productivity gains.

The business impact extends beyond simple monitoring gaps. Failures in OpenAI's ChatGPT take longer to resolve but occur less frequently than those in Anthropic's Claude, creating unpredictable monitoring blackouts. When dashboards lose connectivity, organizations miss critical signals about adoption plateaus or training needs.

The FAILS framework offers 17 types of failure analysis for LLM services, including reliability metrics such as Mean Time to Recovery (MTTR) and Mean Time Between Failures (MTBF). These analyses show how vendor instability correlates directly with dashboard reliability issues.

Financial consequences compound quickly. Average downtime costs reach $9,000 per minute for large organizations — and when AI adoption dashboards fail, organizations lose visibility into investments worth millions in licensing and training.
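To put the per-minute figure in perspective, the sketch below multiplies it out for a few outage lengths. The outage durations are illustrative assumptions, not measurements, and the rate is the average cited above rather than a number for any specific organization.

```python
COST_PER_MINUTE_USD = 9_000  # average cited above for large organizations


def outage_cost(minutes_down: float, cost_per_minute: float = COST_PER_MINUTE_USD) -> float:
    """Estimate the cost of an outage as duration times a flat per-minute rate."""
    if minutes_down < 0:
        raise ValueError("minutes_down must be non-negative")
    return minutes_down * cost_per_minute


# Illustrative outage lengths (assumptions, not observed incidents).
for minutes in (5, 30, 120):
    print(f"{minutes:>4} min outage: about ${outage_cost(minutes):,.0f}")
```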

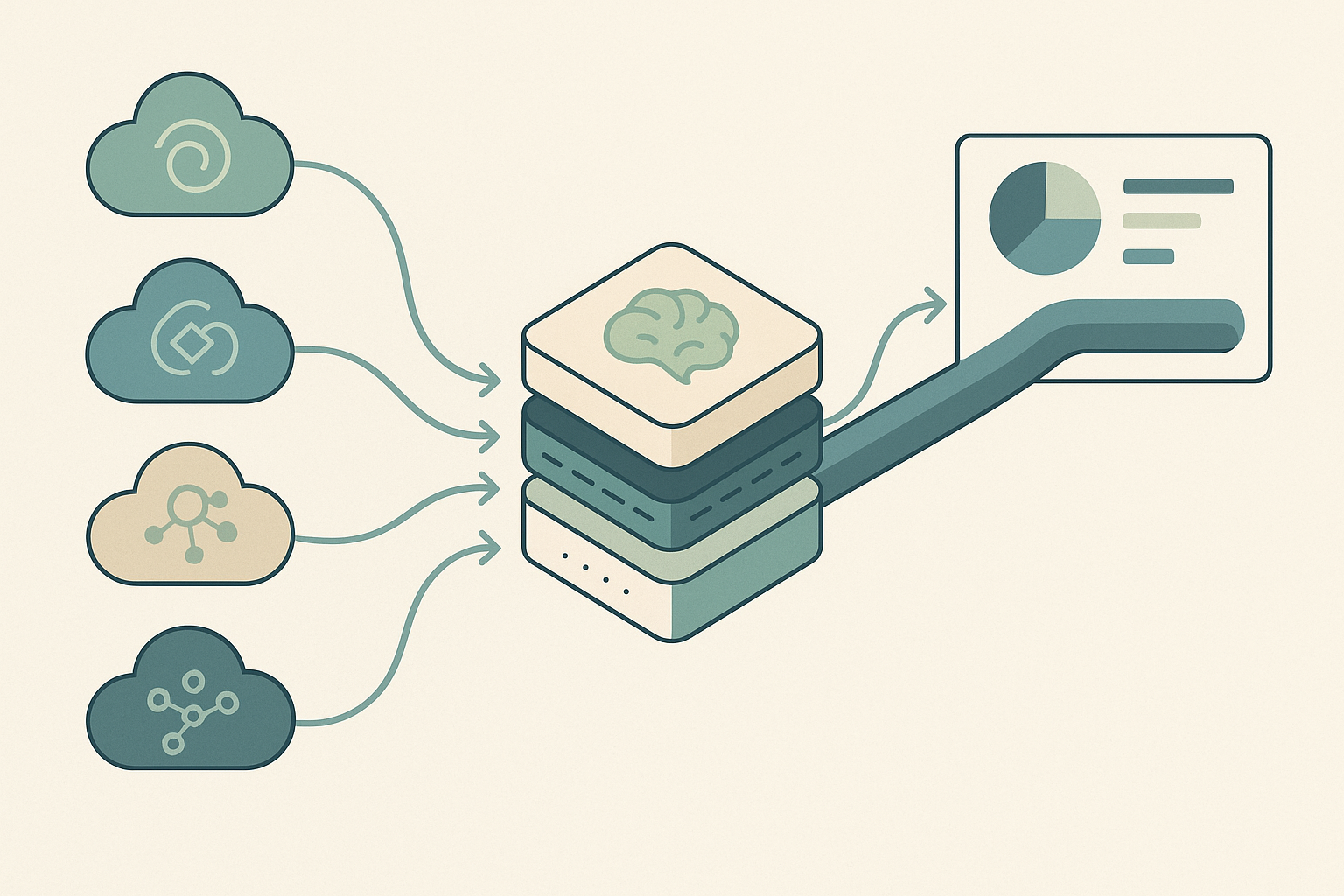

Instead of depending on a single AI provider, configure multiple providers with automatic failover. This provider-level resilience ensures dashboards maintain data flow even during complete vendor outages.
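A minimal sketch of provider-level failover might look like the following. The provider names and fetch functions are hypothetical stand-ins; real fetchers would call each vendor's usage API, and a production version would add timeouts, retries, and logging.

```python
from typing import Callable, Dict, List, Tuple

# A fetcher takes no arguments and returns usage records.
Fetcher = Callable[[], List[dict]]


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


def fetch_with_failover(providers: List[Tuple[str, Fetcher]]) -> Tuple[str, List[dict]]:
    """Try providers in priority order and return (provider_name, records)."""
    errors: Dict[str, str] = {}
    for name, fetch in providers:
        try:
            return name, fetch()
        except Exception as exc:  # any failure moves on to the next provider
            errors[name] = repr(exc)
    raise AllProvidersFailed(errors)


# Hypothetical fetchers used only to demonstrate the control flow.
def primary_down() -> List[dict]:
    raise ConnectionError("primary usage API timed out")


def secondary_ok() -> List[dict]:
    return [{"user": "u1", "prompts": 12}]


source, records = fetch_with_failover(
    [("primary", primary_down), ("secondary", secondary_ok)]
)
print(source, records)
```

Returning the provider name alongside the data lets the dashboard label which source served a given refresh, which matters when sources differ in coverage.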

API gateways optimized for AI agents support context-aware workflows and standardized tool orchestration via the Model Context Protocol (MCP). Unlike traditional gateways built for client-server communication, MCP gateways handle the unique requirements of AI monitoring systems.

Many teams are moving away from complex multi-agent systems toward simpler architectures centered on a single capable model orchestrating a rich set of tools. This approach reduces failure points while maintaining comprehensive monitoring coverage.

A new class of AI gateways is emerging, designed to manage and secure API connections with AI providers. These specialized gateways include features such as token management, response caching, and request guardrails that traditional API infrastructure lacks.

According to the 2024 Gartner API Strategy Survey, 71% of organizations now rely on third-party APIs from SaaS vendors, making multi-provider strategies essential for maintaining dashboard uptime.

Lunar.dev adds only 4 milliseconds of latency at the 95th percentile while providing critical failover capabilities, which suggests that resilience doesn't have to come at the cost of performance.

Provider-level fallbacks work best when combined with circuit breakers. These patterns prevent cascading failures by automatically routing traffic away from degraded endpoints before complete outages occur.
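For readers unfamiliar with the pattern, here is a minimal circuit breaker sketch. The class name, thresholds, and the injected clock are all illustrative assumptions; established libraries offer hardened versions of the same idea.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(Exception):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: allow one trial call
        return "open"

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.state == "open":
            raise CircuitOpenError("endpoint is cooling down; skipping call")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0
        self._opened_at = None
        return result


# Demonstration with a fake clock so the example runs instantly.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])


def flaky() -> str:
    raise TimeoutError("vendor API timed out")


for _ in range(2):
    try:
        breaker.call(flaky)
    except TimeoutError:
        pass
print(breaker.state)   # open: calls are now skipped without hitting the vendor
now[0] = 11.0
print(breaker.state)   # half-open: the next call is a trial
print(breaker.call(lambda: "ok"), breaker.state)  # a success closes the circuit
```

In a dashboard pipeline, a `CircuitOpenError` is the signal to route the request to a fallback provider or to cached data instead of waiting on a degraded endpoint.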

A multi-layer caching strategy is essential for maintaining dashboard continuity. Cache frequently accessed metrics at multiple levels — from raw API responses to processed analytics — ensuring dashboards display recent data even during extended outages.
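One way to get this behavior is a cache that serves the last known value, flagged as stale, whenever a refresh fails. The sketch below is a single in-memory layer under that assumption; the class name, TTL, and loaders are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class StaleTolerantCache:
    """Serve fresh values within the TTL; fall back to stale values on errors."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str, loader: Callable[[], Any]) -> Tuple[Any, bool]:
        """Return (value, is_stale) for the key, refreshing via loader if needed."""
        now = self._clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1], False
        try:
            value = loader()
        except Exception:
            if cached is not None:
                return cached[1], True  # outage: serve the last known value
            raise  # nothing cached yet, so the error has to surface
        self._store[key] = (now, value)
        return value, False


# Demonstration with a fake clock and hypothetical loaders.
now = [0.0]
cache = StaleTolerantCache(ttl_seconds=60, clock=lambda: now[0])


def healthy() -> dict:
    return {"active_users": 420}


def outage() -> dict:
    raise ConnectionError("usage API unavailable")


print(cache.get("adoption", healthy))  # fresh value, is_stale False
now[0] = 120.0
print(cache.get("adoption", outage))   # same value, is_stale True
```

One instance per layer (raw API responses, processed metrics) is one way to apply this. Passing the stale flag through to the dashboard lets it show a "last updated" notice rather than presenting old numbers as live; propagating the flag between layers is left out of this sketch.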

The Light vs. Heavy Usage Rate metric segments users by how intensively they use AI tools, and it remains measurable from cached data even when real-time feeds fail. Because these usage patterns persist across outages, they provide continuity for trend analysis.
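As an illustration, the segmentation can be computed from cached per-user counts alone. The threshold of 10 interactions per week and the sample data below are assumptions made for this sketch; the actual cutoff depends on how an organization defines heavy usage.

```python
from typing import Dict

HEAVY_THRESHOLD = 10  # hypothetical cutoff: AI interactions per week


def segment_users(weekly_interactions: Dict[str, int],
                  heavy_threshold: int = HEAVY_THRESHOLD) -> Dict[str, float]:
    """Return the share of active AI users in the light and heavy segments."""
    # Users with zero interactions are not AI users this week, so skip them.
    active = [n for n in weekly_interactions.values() if n > 0]
    if not active:
        return {"light": 0.0, "heavy": 0.0}
    heavy = sum(1 for n in active if n >= heavy_threshold)
    return {
        "light": (len(active) - heavy) / len(active),
        "heavy": heavy / len(active),
    }


# Hypothetical cached counts from the last successful sync.
cached_counts = {"u1": 2, "u2": 15, "u3": 0, "u4": 11, "u5": 4}
print(segment_users(cached_counts))
```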

Around 80% of AI users report productivity improvements — a metric that correlates with historical usage patterns stored locally. Organizations can extrapolate these gains during API downtime using baseline measurements.

MTTR measures total resolution time from investigation to recovery, while MTBF calculates average time between consecutive failures. These reliability metrics help organizations assess which data sources provide the most stable monitoring foundation.
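Both metrics are straightforward to compute from an incident log. The sketch below assumes each incident is a (start, end) pair and measures MTBF from the start of one incident to the start of the next; other conventions, such as measuring from recovery to the next failure, are also in use. The incident data is made up for the example.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Incident = Tuple[datetime, datetime]  # (start, end) of one outage


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean Time to Recovery: average duration of an incident."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


def mtbf(incidents: List[Incident]) -> timedelta:
    """Mean Time Between Failures: average gap between consecutive incident starts."""
    if len(incidents) < 2:
        raise ValueError("need at least two incidents")
    starts = sorted(start for start, _ in incidents)
    gaps = [later - earlier for earlier, later in zip(starts, starts[1:])]
    return sum(gaps, timedelta()) / len(gaps)


# Hypothetical incident log for one data source.
incidents = [
    (datetime(2025, 1, 1, 10, 0), datetime(2025, 1, 1, 10, 30)),
    (datetime(2025, 1, 8, 10, 0), datetime(2025, 1, 8, 11, 30)),
    (datetime(2025, 1, 15, 10, 0), datetime(2025, 1, 15, 10, 30)),
]
print("MTTR:", mttr(incidents))
print("MTBF:", mtbf(incidents))
```

Computing both per data source makes the comparison concrete: a source with a short MTBF but a short MTTR causes frequent small gaps, while one with a long MTTR causes rare but extended blackouts.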

Most HR Tech systems using AI are classified as high-risk under the EU AI Act, and violations can draw fines of up to 7% of global revenue. Dashboard systems must maintain compliance even when switching between data sources during outages.

Microsoft Purview DSPM for AI provides central management for securing AI data and monitoring usage, automatically running weekly risk assessments for the top 100 SharePoint sites. This governance layer ensures compliance regardless of API availability.

Worklytics data is fully anonymized and content-free, helping compliance teams craft approved use policies with confidence. This privacy-first approach maintains regulatory compliance even when failover systems activate.

From a DevOps perspective, enabling observability in agents is necessary for ensuring AI safety. Stakeholders gain insights into agents' inner workings, allowing them to proactively detect anomalies and prevent potential failures before they impact dashboards.

FAILS helps researchers and engineers understand failure patterns and mitigate operational incidents in LLM services. The framework provides comprehensive analysis, including temporal trends and service reliability metrics.

35% of businesses now use end-to-end API monitoring — a critical capability for detecting silent failures where APIs return incomplete data without triggering error states.
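Silent failures can be caught by validating every payload for completeness, not just checking the status code. The following is a minimal sketch; the field names and the expected record count are hypothetical and would come from the actual API schema and historical volumes.

```python
from typing import FrozenSet, List

REQUIRED_FIELDS = frozenset({"user_id", "date", "interactions"})  # hypothetical schema


def completeness_issues(records: List[dict], expected_min_records: int,
                        required_fields: FrozenSet[str] = REQUIRED_FIELDS) -> List[str]:
    """Return human-readable problems found in an otherwise successful response."""
    issues: List[str] = []
    if len(records) < expected_min_records:
        issues.append(
            f"only {len(records)} records, expected at least {expected_min_records}"
        )
    for index, record in enumerate(records):
        # Treat absent keys and explicit nulls the same way.
        missing = sorted(f for f in required_fields if record.get(f) is None)
        if missing:
            issues.append(f"record {index} missing {', '.join(missing)}")
    return issues


# A response that came back without an error but is incomplete.
payload = [
    {"user_id": "u1", "date": "2025-03-01", "interactions": 4},
    {"user_id": "u2", "date": "2025-03-01"},
]
for issue in completeness_issues(payload, expected_min_records=3):
    print("WARNING:", issue)
```

An empty list means the payload passed these checks; a non-empty one can be treated like any other failure, for example by counting it against the provider's error budget or triggering a fallback.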

A Fortune 500 financial services company struggled with fragmented AI adoption across its 15,000-employee workforce. API instability from multiple vendors threatened to derail its monitoring efforts.

Within one quarter of implementing a unified dashboard with built-in resilience, the company achieved a 28% increase in overall AI adoption across all departments — despite experiencing weekly vendor outages.

Processed data flows into Power BI through Worklytics' DataStream service, enabling real-time dashboard updates. The multi-provider architecture maintained 99.5% dashboard availability even as individual vendor APIs experienced significant downtime.

Building resilient GenAI adoption dashboards requires accepting that API instability is the new normal. Organizations that architect for failure — with multi-provider pipelines, intelligent caching, and privacy-compliant fallbacks — maintain visibility even as individual vendors experience outages.

Worklytics empowers organizations to measure productivity, collaboration, and engagement using ethical, privacy-first analytics. By combining robust data architecture with comprehensive monitoring, organizations can track AI adoption reliably regardless of vendor stability.

The path forward involves treating dashboard resilience as a core requirement, not an afterthought. With proper architecture and tools like Worklytics, organizations can maintain continuous insight into their AI transformation journey — turning API instability from a critical weakness into a manageable operational challenge.

API instability can lead to frequent data gaps in dashboards, preventing accurate measurement of AI usage trends and productivity gains. This instability can result in organizations missing critical signals about adoption plateaus or training needs.

Organizations can design resilient data pipelines by configuring multiple AI providers with automatic failover, using API gateways optimized for AI agents, and implementing multi-layer caching strategies to maintain data flow and dashboard continuity during outages.

Metrics like Light vs. Heavy Usage Rate and productivity improvements can still be measured through cached data during API outages. These metrics provide continuity for trend analysis and help organizations extrapolate gains using baseline measurements.

Worklytics ensures privacy and compliance by keeping data fully anonymized and content-free, helping compliance teams craft approved use policies. This privacy-first approach maintains regulatory compliance even when failover systems activate.

API downtime can cost large organizations an average of $9,000 per minute. When AI adoption dashboards fail, organizations lose visibility into investments worth millions in licensing and training, highlighting the financial impact of such outages.