In the rush to leverage artificial intelligence at work, employees aren’t always waiting for official approval. Shadow AI refers to the unsanctioned use of AI tools or applications within an organization, without IT or management oversight.

In practical terms, this means a team member might use a generative AI service like ChatGPT or a coding assistant on their own initiative to speed up tasks – without informing IT or obtaining permission.

Shadow AI has exploded alongside the accessibility of generative AI. According to Microsoft’s 2024 Work Trend Index, 75% of global knowledge workers are already using AI in their jobs, and tellingly, 78% of those AI-savvy employees are “bringing their own AI” tools into work rather than relying solely on IT-provided solutions.

Why the stealth? Typically, shadow AI use isn’t malicious. Employees turn to unofficial AI tools to boost productivity or solve problems quickly, especially if the official tools provided feel inadequate or slow. These individuals are usually trying to do their jobs better or faster – but by sidestepping IT oversight, they create a new set of challenges for the organization.

The rise of shadow AI in workplaces isn’t happening by accident. It results from multiple converging forces, including growing productivity demands, rigid IT policies, and employees’ desire to experiment and stay competitive. Together, these dynamics create the perfect environment for unauthorized AI use to flourish.

AI is no longer locked behind tech departments or coding skills. With just a few clicks, anyone can access powerful tools online. This ease of use fuels curiosity and experimentation — employees naturally want to see how AI can help them work smarter, faster, and better. When innovation is only a sign-up away, it’s hard to resist trying it out.

Modern workplaces put constant pressure on people to accomplish more in less time. AI feels like the ultimate shortcut: it automates tasks, summarizes information, and generates ideas in seconds. So when company tools fall short, employees often turn to AI on their own, chasing that edge in speed and efficiency. Shadow AI often starts as a simple desire to get ahead.

Shadow AI often fills a void left by the organization. Perhaps the company hasn’t yet rolled out approved AI solutions, or the ones in place don’t cover a team’s specific needs. Eager to innovate, teams might experiment with external AI platforms to fill gaps in functionality.

Corporate processes can move at a crawl. Between approvals, security checks, and compliance reviews, months can pass before a tool is approved for use. For employees under pressure, waiting that long is too long. They act fast, adopting AI tools on their own and hoping for forgiveness later. Without clear AI policies, people rely on their own judgment, which can blur the lines of what’s allowed.

Some company cultures encourage creativity and autonomy, sometimes to an excessive degree. When “thinking outside the box” is rewarded, employees may view unofficial AI use as initiative rather than risk. What starts as a clever solution can quietly evolve into shadow AI. In many ways, it’s a byproduct of ambition and innovation meeting a lack of oversight.

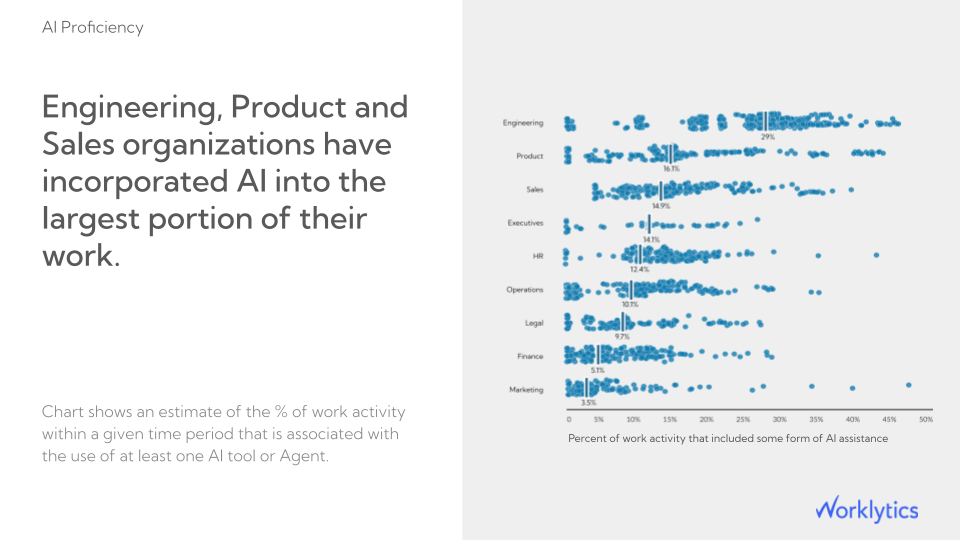

Shadow AI isn’t confined to tech experts. From HR and marketing to leadership and operations, employees across the board are exploring AI to make their work easier and more impactful. The motivation is universal: to perform better, move faster, and stay competitive. It’s not about breaking rules; it’s about keeping up in an AI-driven world.

While the benefits of shadow AI (speed, innovation, agility) are enticing, the practice also poses significant risks. When AI tools are adopted without oversight, organizations face a blind spot that can lead to technical, ethical, and business pitfalls. Here are the key challenges that shadow AI poses:

The biggest risk of shadow AI is data exposure. When employees use external tools, they may unknowingly share sensitive information with systems outside the company's control. Many AI platforms store user inputs, putting proprietary data at risk. In regulated industries, even small leaks can trigger penalties and erode customer trust.

Unapproved AI tools make it difficult for compliance teams to track where data goes or how it’s handled. Employees may inadvertently violate privacy laws or transfer information across borders without being aware of the consequences. As AI regulations demand transparency, hidden usage can lead to fines, legal issues, and intellectual property misuse.

Unvetted AI systems often generate flawed or biased results. When employees rely on them, the company risks making poor decisions, spreading misinformation, or incurring reputational harm. Without oversight, these outputs can undermine credibility and introduce ethical issues that are difficult to rectify.

Mishandled data or unreliable AI results can quickly damage a company’s reputation. Clients may lose confidence, and employees might grow frustrated if others secretly use AI tools. Once trust is broken, it becomes difficult to rebuild both internally and externally.

Shadow AI creates fragmentation within the organization’s tech environment. Independent tool adoption leads to security gaps, redundant costs, and missed updates. Without visibility, IT cannot manage or integrate these tools effectively, weakening the overall system.

Hidden AI use clouds visibility into how work gets done. Leaders may misread productivity trends or overlook training needs if the impact of AI goes unnoticed. Understanding actual AI use helps organizations encourage innovation while maintaining secure and compliant operations.

In summary, shadow AI presents a classic risk-vs-reward tradeoff. On one hand, you have employees supercharging their productivity and creativity; on the other, you have uncontrolled risks swirling beneath the surface.

Completely stamping out shadow AI is unrealistic (and arguably counterproductive). Much like the shadow IT wave that preceded it, the solution isn’t to ban everything, but to bring unsanctioned usage into the light and manage it effectively. The goal for organizations should be to enable the benefits of AI for employees in a safe and governed manner. Here are strategies to manage shadow AI effectively:

Begin by defining what is and isn’t acceptable when it comes to AI use at work. Many employees turn to shadow AI because there are no clear rules. A strong policy should outline approved tools, data handling protocols, and restrictions on the use of sensitive or proprietary information. It should also address intellectual property, content originality, and ethical use. Make the policy practical and easy to understand, and communicate it through regular training and internal channels so that everyone is aware of the boundaries.

Offer Approved Alternatives and Tools

The best way to prevent unauthorized AI use is to provide trusted options. Deploy company-approved tools that meet the needs of different teams, such as writing assistants, design platforms, or data analysis systems. When employees have access to secure, vetted tools, they are less likely to seek out unapproved ones. Promote these tools actively so teams understand their benefits and know they are supported by IT and security teams.

Create a Simple Approval Process for New Tools

Since AI evolves quickly, employees will inevitably find new tools they want to try. Instead of enforcing blanket bans, establish a fast and transparent approval system. A simple submission form or review channel can enable IT or security teams to quickly evaluate tools. By offering a clear path for approval, employees feel empowered to innovate while staying compliant. This approach maintains high visibility and reduces the temptation to circumvent the rules.

Educate and Build Awareness

Knowledge is one of the best defenses against shadow AI risks. Offer regular awareness sessions to help employees understand both the benefits and the dangers of using AI without oversight. Teach best practices such as anonymizing data, verifying AI outputs, and disabling data collection where possible. Maintain a positive and empowering tone, emphasizing collaboration over punishment. When people understand why responsible use matters, they are more likely to follow guidelines.

Monitor and Gain Visibility

You can only manage what you can see. Implement systems to track AI activity across the organization while respecting employee privacy. Modern monitoring tools can detect connections to AI platforms and flag unusual data behavior. Use insights from monitoring to identify where employees are turning to unapproved tools and why. This helps IT and leadership refine policies, close gaps, and better support employees who rely on AI for their work.

Implement Guardrails, Not Roadblocks

Striking a balance between safety and flexibility is essential. Instead of banning AI tools outright, create secure environments where employees can experiment responsibly and safely. Utilize technical controls, such as privacy filters, role-based access, and sandbox testing areas, to minimize risk. By setting clear boundaries and offering safe alternatives, organizations can encourage innovation without compromising security.

Continual Review and Adaptation

Shadow AI is constantly evolving, so your strategy must evolve accordingly. Regularly assess which tools employees are using and update your approved list as needed. Stay alert to new regulations and shifts in AI technology to keep policies relevant. Establish a cross-functional AI governance group that reviews developments and adapts policies accordingly. Staying proactive ensures that AI remains a strategic advantage rather than a source of risk.
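To make the "privacy filter" guardrail mentioned among the strategies above more concrete, here is a minimal Python sketch of a redaction step that could run before a prompt leaves the company network. The function name `redact`, the pattern labels, and the patterns themselves (including the `sk-` key prefix) are assumptions made for illustration; a real filter would need a vetted, organization-specific pattern list and would likely combine pattern matching with other controls.

```python
import re

# Hypothetical patterns for illustration only; not an exhaustive or vetted list.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),  # assumed key format
}


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace each pattern match with a placeholder and count the matches."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        # subn returns the rewritten text and the number of substitutions.
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    clean, counts = redact("Email jane.doe@example.com about SSN 123-45-6789")
    print(clean)
    print(counts)
```

Returning the match counts alongside the cleaned text is a deliberate choice in this sketch: the counts can feed awareness training or policy reviews without anyone having to store the sensitive values themselves.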

Above all, the message to convey within your organization is that AI is welcome, but it must be used responsibly. Employees should feel they can get the benefits of these exciting tools with the company’s support, not in spite of it. In fact, encouraging responsible use of AI and acknowledging its benefits can turn a potential rogue behavior into a competitive advantage. Companies that successfully navigate shadow AI will be those that neither overreact with fear nor ignore the risks – but chart a middle path that harnesses employee innovation while protecting the enterprise.

One practical challenge in managing shadow AI is getting visibility into how AI is being used across the enterprise. This is where people analytics and usage insights come into play. Worklytics is an example of a solution that can help organizations tackle the shadow AI problem head-on. It provides tools to monitor and analyze AI adoption in a privacy-conscious way, giving stakeholders data-driven insights rather than guesswork.

Worklytics helps organizations understand how employees use their digital tools by aggregating data from collaboration platforms, calendars, code repositories, and communication systems. This creates a clear picture of normal tool usage and highlights any unusual behavior. For example, its dashboards can show how much teams rely on approved AI tools, such as Microsoft Copilot or Google Workspace AI. If one department shows very little usage, it could indicate they’ve turned to unapproved tools instead—a sign of potential shadow AI. On the other hand, high-performing teams using AI effectively can provide valuable examples for others to follow.

One of Worklytics’ strengths is identifying patterns that suggest unsanctioned AI activity. By comparing expected usage baselines to actual data, it can flag inconsistencies. For instance, if the marketing team demonstrates significantly more AI-driven work than what the official tool accounts for, that gap could indicate the use of external, unapproved tools. These anomalies serve as early warning signs. Worklytics essentially builds a behavioral model for normal AI activity, then detects when usage spikes or shifts beyond those norms.
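The idea of comparing a usage baseline against actual data can be sketched in a few lines of Python. This is not Worklytics' actual model, which the article does not describe in detail; it is a simple z-score check under assumed inputs. The function name `flag_anomalies`, the weekly usage counts, and the threshold of 2.0 are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(
    weekly_usage: dict[str, list[float]], threshold: float = 2.0
) -> dict[str, float]:
    """Return teams whose latest week deviates from their own baseline.

    weekly_usage maps a team name to approved-tool usage counts, oldest
    first. The last value is compared with the mean and sample standard
    deviation of the earlier weeks (a plain z-score).
    """
    flagged: dict[str, float] = {}
    for team, series in weekly_usage.items():
        if len(series) < 3:
            continue  # need at least two baseline weeks plus the latest one
        history, latest = series[:-1], series[-1]
        sigma = stdev(history)
        if sigma == 0:
            continue  # flat baseline, so the z-score is undefined
        z = (latest - mean(history)) / sigma
        if abs(z) >= threshold:
            flagged[team] = round(z, 2)
    return flagged


if __name__ == "__main__":
    usage = {
        "marketing": [100, 104, 96, 100, 40],
        "engineering": [200, 210, 190, 205, 198],
    }
    print(flag_anomalies(usage))
```

In this sketch a strongly negative score (a sudden drop in approved-tool usage) and a strongly positive one (a spike) are both flagged, since either can be a prompt to ask a team what changed. A flag is a starting point for a conversation, not proof of unsanctioned use.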

For HR and people analytics teams, Worklytics provides valuable visibility into how AI impacts the workforce. It answers key questions like which teams are leading in AI adoption, where support or training is needed, and how AI usage correlates with productivity. Because Worklytics aggregates metadata without exposing personal content, it maintains employee trust while offering actionable insights. This allows organizations to monitor AI responsibly, focusing on patterns and performance rather than individuals.
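One common way to keep this kind of reporting focused on patterns rather than individuals is to report only team-level counts and suppress groups that are too small to hide any one person. The Python sketch below illustrates that general idea; it is an assumption for illustration and not a description of how Worklytics works. The function name `team_adoption`, the `(team, user_id)` event shape, and the minimum group size of 5 are hypothetical.

```python
from collections import defaultdict

MIN_GROUP_SIZE = 5  # assumed threshold; choose one that fits your privacy policy


def team_adoption(
    events: list[tuple[str, str]], min_group_size: int = MIN_GROUP_SIZE
) -> dict[str, int]:
    """Count distinct AI-tool users per team, suppressing small groups.

    events is a list of (team, user_id) pairs taken from usage metadata.
    No prompt or message content is needed for this report.
    """
    users_by_team: dict[str, set[str]] = defaultdict(set)
    for team, user_id in events:
        users_by_team[team].add(user_id)
    # Only team-level counts leave this function; user ids are discarded.
    return {
        team: len(users)
        for team, users in users_by_team.items()
        if len(users) >= min_group_size
    }


if __name__ == "__main__":
    events = [("sales", f"u{i}") for i in range(6)] + [("legal", "a"), ("legal", "b")]
    print(team_adoption(events))
```

Note that this sketch suppresses a team when fewer than the minimum number of distinct users appear in the data; a stricter variant would suppress based on total team headcount instead.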

From an executive perspective, Worklytics connects AI usage directly to business outcomes. Leaders can see where shadow AI is emerging and address it before it becomes a risk, or identify teams that are achieving measurable gains through AI. This transparency helps leadership make informed decisions about investments, policies, and enablement strategies. Ultimately, it bridges the gap between employee innovation and organizational control, ensuring AI adoption remains both safe and effective.