Artificial intelligence is reshaping businesses across every industry, but measuring the return on investment (ROI) of AI initiatives remains a critical challenge.

Companies are pouring significant resources into AI tools – often spending six or seven figures annually on licenses – yet many struggle to quantify the benefits in concrete terms.

In fact, recent data shows that while over 95% of U.S. firms have adopted generative AI, roughly 74% have yet to achieve tangible value from these investments. This gap between enthusiasm and actual results has executives asking a tough question: Are our AI investments truly paying off?

Tracking and improving AI ROI is essential not only to justify these expenditures but also to optimize AI use for maximum business impact.

For business leaders, ROI is the language of value. Understanding the ROI of AI initiatives helps connect AI projects to real business goals and outcomes.

It also guides investment prioritization, spotlighting which AI use cases deliver the most value relative to their cost. In practice, demonstrating ROI can mean the difference between an AI pilot remaining a small experiment and scaling into a core part of the business.

When stakeholders see hard numbers, such as hours saved in operations or a measurable lift in customer retention attributable to AI, they gain confidence to expand successful projects. Measuring ROI also supports change management: employees are more likely to embrace AI tools when they see gains in productivity or a lighter workload, which eases concerns about new technologies.

In short, tracking ROI isn’t just an accounting exercise; it’s about ensuring AI adoption translates into measurable business value that everyone can rally behind.

Tracking ROI starts with defining the right metrics and data collection methods. The goal is to connect what the AI is doing (usage and performance) to productivity improvements and, ultimately, to the business outcomes leadership cares about. The core metrics fall into three layers: adoption, productivity, and business impact.

Begin by measuring how extensively AI tools are used across the organization. Key indicators include the number of active users, frequency and duration of use, and feature utilization rates.

For example, tracking Daily Active Users (DAU) for each AI application helps distinguish one-time experimentation from consistent, habitual use.

These adoption metrics show whether the workforce is actually embracing AI tools.
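To make the DAU idea concrete, here is a minimal Python sketch. It assumes a hypothetical usage log with one (user, day) entry per interaction with a single AI tool. The log format, the user names, and the 5-active-day cut-off for "habitual" use are illustrative assumptions, not a standard definition.

```python
from collections import defaultdict
from datetime import date

# Hypothetical usage log: one (user, day) entry per interaction with one AI tool.
events = [
    ("ana", date(2025, 3, 3)), ("ana", date(2025, 3, 4)), ("ana", date(2025, 3, 5)),
    ("ana", date(2025, 3, 6)), ("ana", date(2025, 3, 7)),
    ("ben", date(2025, 3, 3)),
    ("cho", date(2025, 3, 3)), ("cho", date(2025, 3, 5)),
]

def daily_active_users(events):
    """Return {day: number of distinct users active that day}."""
    users_by_day = defaultdict(set)
    for user, day in events:
        users_by_day[day].add(user)
    return {day: len(users) for day, users in sorted(users_by_day.items())}

def classify_users(events, habitual_min_days=5):
    """Label users 'habitual' or 'experimenting' by their number of distinct active days.

    The default threshold is an illustrative assumption; tune it to your review window.
    """
    days_by_user = defaultdict(set)
    for user, day in events:
        days_by_user[user].add(day)
    return {
        user: "habitual" if len(days) >= habitual_min_days else "experimenting"
        for user, days in days_by_user.items()
    }

print(daily_active_users(events))
print(classify_users(events))
```

Counting distinct active days per user, rather than raw interactions, keeps a single burst of experimentation from looking like sustained adoption.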

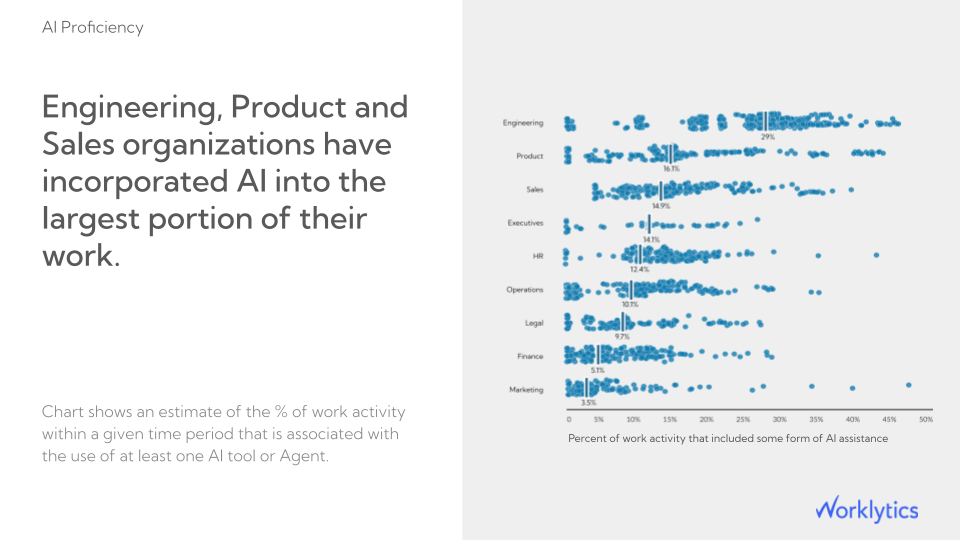

It’s also useful to segment usage data by team or role. You might find, for instance, that your engineering department heavily uses an AI coding assistant, whereas the sales team’s use of an AI CRM add-on is low. Such insights highlight where AI adoption is thriving or lagging, allowing targeted interventions.
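A team-level breakdown is a simple ratio of active users to headcount. The sketch below assumes hypothetical per-team counts and an arbitrary 30% adoption target; substitute your own figures and threshold.

```python
# Hypothetical headcount and active-user counts per team for one review period.
headcount = {"engineering": 40, "sales": 25, "marketing": 10}
active_users = {"engineering": 34, "sales": 5, "marketing": 6}

def adoption_by_team(headcount, active_users):
    """Share of each team's headcount that actively used the AI tool in the period."""
    return {
        team: active_users.get(team, 0) / size
        for team, size in headcount.items()
        if size > 0  # skip empty teams to avoid division by zero
    }

def lagging_teams(rates, target=0.30):
    """Teams below the adoption target (30% here is an arbitrary example value)."""
    return sorted(team for team, rate in rates.items() if rate < target)

rates = adoption_by_team(headcount, active_users)
for team, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{team:<12} {rate:.0%}")
print("needs support:", lagging_teams(rates))
```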

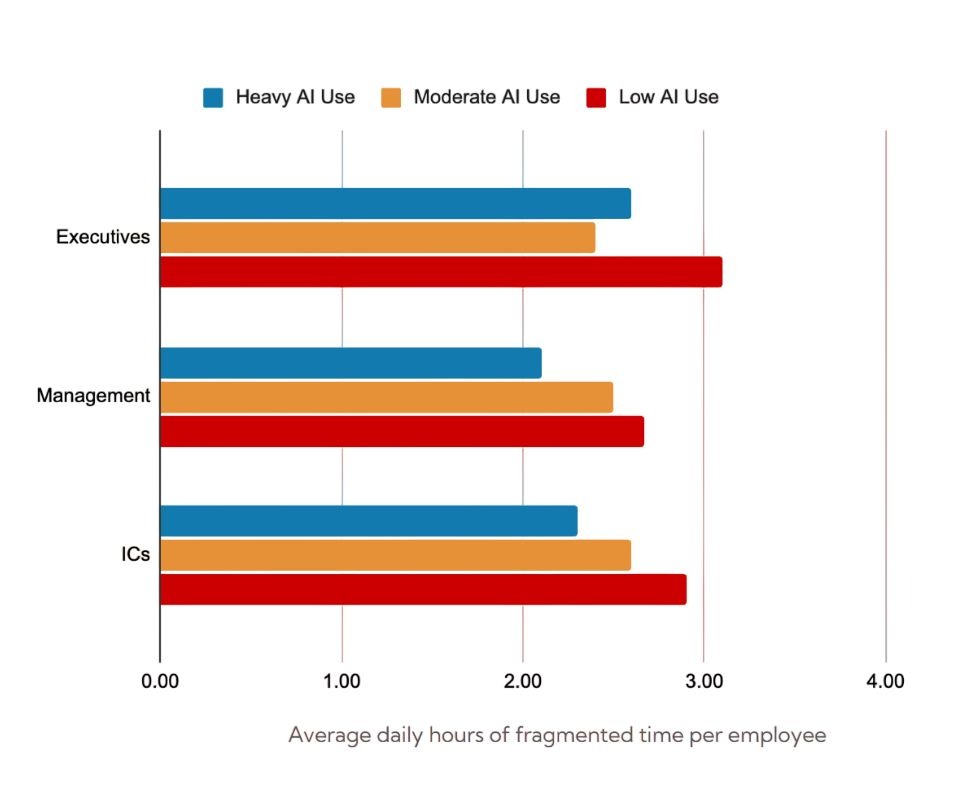

Next, connect AI usage to operational performance indicators by asking how AI is changing the way work gets done. Time-based metrics are especially telling: if an AI tool automates report generation, for instance, measure how much it cuts preparation time. Error rates and quality metrics are relevant too: does the AI reduce mistakes in outputs such as code errors or manual data-entry errors?
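Both kinds of metric reduce to a before-and-after percentage change. The sketch below uses hypothetical figures for a report-generation workflow. Errors are normalized by report volume, because raw error counts can rise simply because the team produces more.

```python
def percent_change(before, after):
    """Relative change from `before` to `after` in percent; negative means a reduction."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before * 100

# Hypothetical figures for a report-generation workflow.
prep_minutes_before, prep_minutes_after = 90, 60
errors_before, reports_before = 12, 200
errors_after, reports_after = 6, 240

time_change = percent_change(prep_minutes_before, prep_minutes_after)
# Compare error rates (errors per report), not raw counts, since volume changed.
error_rate_change = percent_change(errors_before / reports_before,
                                   errors_after / reports_after)

print(f"prep time: {time_change:+.1f}%")
print(f"error rate: {error_rate_change:+.1f}%")
```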

Early on, many of these improvements will appear as efficiency gains. Over time, these efficiency metrics should translate into more output (e.g., more code written, more sales calls made) or higher-quality work, which sets the stage for financial gains.

Finally, link these operational improvements to bottom-line results.

When assessing the business impact of an AI initiative, begin by identifying the Key Performance Indicators (KPIs) it is designed to improve. These serve as the foundation for tracking success over time. Focus on hard ROI metrics such as revenue growth, cost reduction, and customer retention.

In addition to financial metrics, include employee-related indicators as part of your “soft ROI.” These capture the less tangible but equally important benefits of AI, such as lighter workloads and employees’ willingness to embrace new tools.

Although these outcomes may not appear immediately in financial statements, they contribute significantly to long-term organizational performance and culture.

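Then connect these measurements into a coherent narrative. Correlate AI usage and productivity data with business outcomes to build the case for cause and effect. Correlation alone does not prove that the AI caused a change, so pair it with a before-and-after comparison against a baseline. For example: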

“AI tool X reduced processing time by 20%, allowing the team to complete two additional projects this quarter and increasing revenue by 5%.”

By establishing these links and tracking them consistently, you not only demonstrate ROI but also reveal how AI strengthens both short-term results and long-term organizational health.
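To check whether AI usage and an outcome actually move together, one common starting point is the Pearson correlation coefficient. The sketch below assumes hypothetical per-team figures (weekly AI sessions and average cycle time in days). A strong negative r here would mean heavier AI use goes with shorter cycle times, but it would not by itself prove the AI caused the difference.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items each")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / (spread_x * spread_y)

# Hypothetical per-team data for one quarter.
ai_sessions_per_week = [2, 5, 8, 12, 15]
cycle_time_days = [10.0, 9.5, 8.0, 7.0, 6.5]

r = pearson_r(ai_sessions_per_week, cycle_time_days)
print(f"Pearson r = {r:.2f}")
```

On Python 3.10 or later, `statistics.correlation` from the standard library computes the same coefficient.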

Watch out for what’s called workslop: fake productivity that looks busy but creates little value. The goal is not to produce more content, but to create real outcomes. Always connect AI activity to results such as time saved, better quality, or faster delivery.

Tip: Start with a baseline. Before rolling out an AI solution, capture the current values of your key metrics (e.g., average handle time, monthly sales, error rate).

This baseline allows you to compare “before vs. after” and attribute improvements to the AI initiative.
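A baseline comparison can be as simple as storing each metric’s pre-rollout value next to its current value. In this sketch the metric names and numbers are hypothetical. The `higher_is_better` flag is an assumption of this example: it makes a positive result always mean improvement, whether the metric should go up (sales) or down (handle time, error rate).

```python
from dataclasses import dataclass

@dataclass
class Metric:
    name: str
    baseline: float          # value captured before the AI rollout
    current: float           # same metric, same definition, after the rollout
    higher_is_better: bool   # True for sales, False for handle time or error rate

    def improvement_pct(self) -> float:
        """Improvement vs. baseline in percent; positive always means 'better'."""
        change = (self.current - self.baseline) / self.baseline * 100
        return change if self.higher_is_better else -change

# Hypothetical before/after snapshot.
metrics = [
    Metric("average handle time (min)", baseline=8.0, current=6.0, higher_is_better=False),
    Metric("monthly sales ($k)", baseline=500.0, current=520.0, higher_is_better=True),
    Metric("error rate (%)", baseline=4.0, current=5.0, higher_is_better=False),
]

for m in metrics:
    print(f"{m.name}: {m.improvement_pct():+.1f}%")
```

A plain before/after comparison does not control for seasonality or other changes made in the same period. Comparing against a team that has not yet adopted the tool gives a stronger basis for attribution.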

Tracking metrics is only half the battle – the other half is optimizing your AI investments to maximize ROI. If your analysis shows less-than-stellar returns, it’s time to adjust strategy. Here are several best practices to help improve the ROI of your AI initiatives:

Focus on AI initiatives that directly support core business objectives like revenue growth, cost reduction, or improved customer experience. Identify clear use cases that address pain points or opportunities, and validate ideas with small pilots before scaling. Avoid projects that don’t clearly tie to measurable business outcomes.

AI ROI often suffers from hidden costs. Account for the full range of expenses: not only software licenses but also data preparation, infrastructure, employee training, and ongoing maintenance. Plan for these from the start, and reuse existing tools or platforms where they already work well. Evaluate whether to build or buy, since third-party solutions can sometimes deliver value faster and more cheaply. Careful cost control protects long-term ROI.
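The standard ROI formula, net gain divided by cost, shows why hidden costs matter. The figures below are hypothetical; the point is the gap between an ROI computed on license fees alone and one computed on the full cost of ownership.

```python
# Hypothetical annual costs for one AI initiative (illustrative figures only).
costs = {
    "licenses": 120_000,
    "data_preparation": 30_000,
    "infrastructure": 25_000,
    "training": 15_000,
    "maintenance": 10_000,
}
# Hypothetical annual benefit, e.g. value of hours saved plus attributable revenue.
annual_benefit = 260_000

def roi_pct(benefit, cost):
    """Classic ROI: (benefit - cost) / cost, expressed in percent."""
    return (benefit - cost) / cost * 100

total_cost = sum(costs.values())
license_only_roi = roi_pct(annual_benefit, costs["licenses"])
full_cost_roi = roi_pct(annual_benefit, total_cost)

print(f"total cost of ownership: {total_cost:,}")
print(f"ROI on licenses only: {license_only_roi:.0f}%")
print(f"ROI on full cost:     {full_cost_roi:.0f}%")
```

With these illustrative numbers, counting only licenses suggests an ROI of about 117%, while the full-cost figure is 30%.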

AI delivers value only when employees use it regularly and effectively. Build adoption through structured onboarding and continuous learning. Offer hands-on training sessions that show employees how AI fits into their daily workflows, such as how a sales representative can use AI to automate lead scoring or how a marketing team can use it to generate campaign reports.

Treat AI adoption as a continuous process. Track performance against initial targets and adjust accordingly. If usage or outcomes fall short of expectations, find out why and act on it: improve training, adjust models, or refine the use case. Scale successful pilots and redirect resources from low-performing ones. Continuous review sustains ROI over time.
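The review loop described above can be expressed as a simple decision rule. This is a hypothetical sketch: the `Pilot` fields, the 50% adoption threshold, and the mapping of results to actions are assumptions chosen to mirror the paragraph, not a prescribed framework.

```python
from dataclasses import dataclass

@dataclass
class Pilot:
    name: str
    adoption_rate: float      # share of intended users who are active, 0..1
    outcome_vs_target: float  # measured outcome / target outcome; 1.0 = on target

def review(pilot, min_adoption=0.5, min_outcome=1.0):
    """Hypothetical review rule mapping a pilot's results to a next step."""
    adopted = pilot.adoption_rate >= min_adoption
    on_target = pilot.outcome_vs_target >= min_outcome
    if adopted and on_target:
        return "scale"
    if on_target:
        # Works for the people who use it, but too few people use it.
        return "improve training"
    if adopted:
        # Widely used but not moving the outcome: revisit the use case or model.
        return "refine use case"
    return "redirect resources"

# Hypothetical pilots.
pilots = [
    Pilot("support summarizer", 0.70, 1.20),
    Pilot("sales lead scoring", 0.20, 1.10),
    Pilot("marketing reports", 0.80, 0.60),
    Pilot("contract drafting", 0.10, 0.40),
]
for p in pilots:
    print(f"{p.name}: {review(p)}")
```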

Avoid expecting immediate, dramatic returns. Many AI projects take 6 to 12 months to mature. Not every hour saved will translate directly into extra output, and some benefits, such as quality improvements or better decision-making, take longer to show. Focus on both short-term efficiencies and long-term strategic value.
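One way to see why patience matters is a payback calculation in which benefits ramp up gradually. This sketch assumes a linear ramp to full benefit over `ramp_months` and constant running costs. Both are simplifications, and every figure is hypothetical.

```python
def payback_month(upfront_cost, monthly_cost, full_monthly_benefit, ramp_months, horizon=36):
    """First month in which cumulative net benefit turns non-negative, or None.

    Assumes benefits grow linearly from 0 to `full_monthly_benefit` over
    `ramp_months` (0 means full benefit from month 1) while running costs stay constant.
    """
    cumulative = -upfront_cost
    for month in range(1, horizon + 1):
        ramp = min(month / ramp_months, 1.0) if ramp_months > 0 else 1.0
        cumulative += ramp * full_monthly_benefit - monthly_cost
        if cumulative >= 0:
            return month
    return None  # no payback within the horizon

print(payback_month(50_000, 10_000, 30_000, ramp_months=0))  # immediate benefits
print(payback_month(50_000, 10_000, 30_000, ramp_months=9))  # nine-month ramp
```

With an upfront cost of 50,000, running costs of 10,000 a month, and a full benefit of 30,000 a month, payback arrives in month 3 if benefits are immediate but in month 9 with a nine-month ramp.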

In summary, maximizing AI ROI means choosing the right projects, managing costs, ensuring adoption, iterating continuously, and taking a realistic, long-term view. When AI is aligned with strategy and integrated thoughtfully into workflows, its value compounds over time.

Worklytics helps organizations accurately measure and improve the ROI of their AI initiatives by providing clear visibility into adoption, productivity impact, and performance outcomes. As a people analytics platform, it turns real-time work data into actionable insights that connect AI usage to measurable business results.

Worklytics integrates with tools like Slack, Zoom, Microsoft 365 Copilot, and ChatGPT Enterprise to show exactly how AI is being used across teams. It consolidates adoption data by department, role, or location, helping leaders see where engagement is high or where additional support is needed. For example, if engineering shows strong usage of AI coding tools while marketing adoption is low, managers can immediately target training or communication to close the gap. Tracking these trends over time provides a clear view of progress toward adoption goals.

Worklytics provides dashboards that show how effectively teams are using AI tools across the organization. These insights highlight metrics such as frequency of use, feature adoption rates, and depth of engagement with AI assistants. Leaders can easily identify which teams are proficient with AI and which need additional training or support. Tracking these metrics over time helps measure progress in AI skill development and ensures employees are using tools to their full potential.

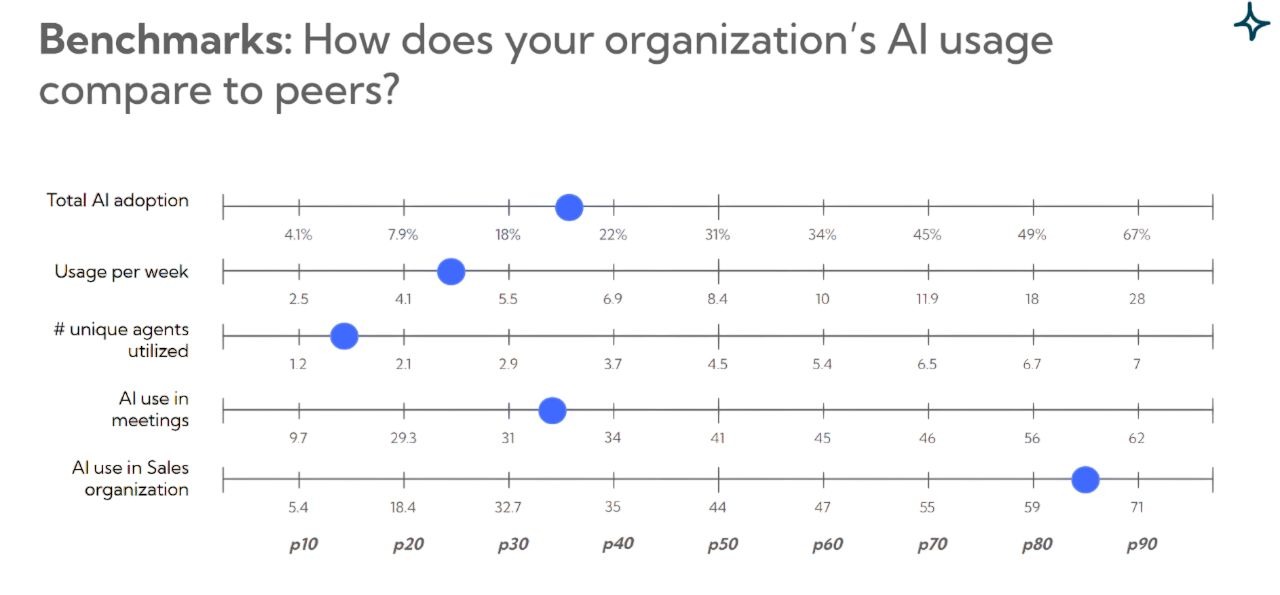

Worklytics enables benchmarking against industry peers, showing how your organization’s AI adoption and performance compare with others in similar sectors. This context helps explain ROI results and sets realistic improvement goals. If adoption or productivity metrics trail competitors, leaders can prioritize enablement; if they lead the field, they can replicate success elsewhere. Worklytics’ upcoming benchmarking insights (expected in 2025) will highlight best practices from top-performing organizations to help guide AI strategy.

Beyond tracking, Worklytics identifies where AI tools are underused and who your “power users” are. This helps managers focus training where it matters most. If certain departments or regions show limited engagement, Worklytics flags those gaps so teams can organize targeted workshops or share best practices from high performers. This feedback loop ensures higher adoption and more consistent, effective use of AI across the organization.

Worklytics helps you move beyond surface-level metrics and uncover the true impact of AI across your organization. From adoption to business outcomes, it’s your single source of truth for AI performance.

Discover how Worklytics can help you maximize ROI.