Performance reviews are a vital tool to help software development teams grow and succeed in the coming year. At the start of a new year, many organizations turn to 360-degree reviews. These are a good way to do this, but they can be challenging to run effectively. When done well, these reviews provide valuable insights; when done poorly, they become time-consuming and usually fail to provide people with meaningful feedback. It’s worth investing effort to ensure that they are a good use of time.

Designing effective reviews for product development teams is particularly challenging, because the process must deliver actionable feedback to specialized roles, such as software engineers, UX/UI designers and product managers.

Too often companies rely on the same generic review process for all employees. This is generally ineffective, as people in technical roles require in-depth feedback on the technical skills they need to succeed in their positions.

Over years of managing software development teams, we have experimented with and evolved several technical review processes. Below are some of the ideas and strategies we found most effective.

In many companies, the review process is centrally controlled, usually by the HR department. For the sake of simplicity, HR departments often apply the same generic review process to the entire organization. This usually means collecting unstructured feedback with a small number of open-ended prompts such as ‘List Jane’s strengths’ or, even worse, ‘How do you think Jane did this quarter?’ These types of reviews are ineffective at evaluating technical roles for a few reasons:

Open-ended questions are difficult for reviewers to complete since they don’t set clear expectations or guidelines for what information to provide. They effectively ask the reviewer to come up with a definition of what constitutes expected behavior and performance.

The lack of guidance they provide means response quality will vary wildly. Some reviewers will provide lots of detailed feedback, while others will provide only the minimum required.

When questions are too broad, reviewers tend to fall back on personal impressions. HR experts warn that purely subjective or oral evaluations are prone to misinterpretation and legal risk. It is far better to avoid subjectivity by using written, precise performance standards, since purely open comments can be misunderstood by either party.

Worst of all, this input is very difficult to process and turn into effective feedback at the end of the review. To illustrate the point, imagine a manager with 5 reports, each being reviewed by 3 peers, 1 manager and themselves. That’s a total of 25 blocks of unstructured text someone has to process at the end of the review. Take that one level up the hierarchy, to the manager’s manager, and that could be 125 blocks of unstructured text someone needs to process to really understand how a team is doing.
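
The arithmetic behind those numbers can be sketched in a few lines of Python. This is only an illustration: the function name and parameters are hypothetical, and the 125 figure assumes that the manager's manager oversees five managers with teams of the same shape, which the example implies but does not state.

```python
def feedback_blocks(reports: int, peers_per_report: int, managers_in_group: int = 1) -> int:
    """Count the free-text responses produced when every reviewer answers one open prompt."""
    # Each report is reviewed by their peers, their manager and themselves.
    reviews_per_report = peers_per_report + 1 + 1
    return reports * reviews_per_report * managers_in_group


# One manager with 5 reports, each reviewed by 3 peers, 1 manager and themselves.
team_total = feedback_blocks(reports=5, peers_per_report=3)

# Assumption: one level up, a leader oversees 5 such managers.
org_total = feedback_blocks(reports=5, peers_per_report=3, managers_in_group=5)

print(team_total, org_total)
```

The count grows multiplicatively with each level of the hierarchy, which is why unstructured text becomes unmanageable so quickly.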

In summary, generic and unstructured performance reviews set everyone up for frustration. They overburden reviewers with ambiguous questions, produce uneven feedback, and make it difficult for leaders to extract any consistent meaning. These pitfalls underscore the need for a more structured and role-specific approach to evaluating technical team members.

The most meaningful feedback comes from a review process that zeroes in on the specific skills and responsibilities required for each person’s role. These skills vary greatly by role, seniority and organization. They may also evolve over time within a specific company, as the requirements for success change.

The more you can target review content for each subject’s particular role and situation, the more actionable the feedback will be.

By aligning the review with the up-to-date job description and expectations of a given role, you ensure the feedback is measuring what actually matters for success.

For software engineers, that may include reviewing specific skills like problem solving in code, code structure and technical definition. For product managers, you might instead review skills such as product design and the ability to communicate a compelling product vision. Structuring review content and tailoring it to the review subject makes it far easier for reviewers to respond to questions, because they know exactly which skills to evaluate. It also means that extracting meaningful feedback at the end of the review process is quick and easy.
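
To show why structured, role-specific input is easy to summarize, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `ROLE_SKILLS` mapping, the `summarize` function, the 1 to 5 rating scale and the sample responses are hypothetical and do not describe any particular review tool. The skill names are simply the examples mentioned above.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical role-specific skill lists, based on the examples in the text.
ROLE_SKILLS = {
    "software_engineer": ["problem solving in code", "code structure", "technical definition"],
    "product_manager": ["product design", "communicating product vision"],
}


def summarize(role, responses):
    """Average each skill's ratings (assumed 1-5 scale) across all reviewers."""
    by_skill = defaultdict(list)
    for response in responses:
        for skill, rating in response.items():
            # Reject ratings for skills that do not belong to this role.
            if skill not in ROLE_SKILLS[role]:
                raise ValueError(f"{skill!r} is not a skill for role {role!r}")
            by_skill[skill].append(rating)
    return {skill: round(mean(ratings), 2) for skill, ratings in by_skill.items()}


# Made-up responses from two reviewers; the second also rated a third skill.
responses = [
    {"problem solving in code": 4, "code structure": 3},
    {"problem solving in code": 5, "code structure": 4, "technical definition": 4},
]
summary = summarize("software_engineer", responses)
print(summary)
```

Because every answer is tied to a named skill, a per-skill summary falls out of a simple group-and-average step rather than requiring someone to read and interpret each response.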

How questions are asked in a review is just as important as what is asked. The best review systems strike a balance between structured questions (which provide consistency and focus) and open-ended prompts (which allow nuance and explanation). Each format has strengths and weaknesses, so a combination of both yields the richest feedback.

Structured questions typically use a multiple-choice or rating-scale format. For example, a review form might ask a peer to rate an engineer’s code quality on a scale from 1 to 5, or to choose to what extent they agree with a statement like “Jane’s code reviews are thorough and constructive.”

Structured items like these offer several benefits:

That said, multiple-choice questions can be too easy to complete. Reviewers may speed through a review without giving enough thought to their responses. To avoid this, we’ve found that it’s best to intersperse sets of structured (multiple-choice) questions with open-ended feedback or open-text inputs.

Open-ended questions or open-text inputs give reviewers the freedom to elaborate in their own words. They encourage a more reflective, narrative response – something ratings alone can’t capture.

A few sentences of written feedback can shed light on the specific situations, behaviors, and outcomes behind a performance score.

The optimal approach is to design review instruments that use structured questions with an optional comment after each section. A proven format is to present 3–5 skill-specific multiple-choice questions in a row, then follow up with a prompt such as: “Please provide additional comments as rationale for your answers above.”
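
One way to picture this format is as a small data structure. The sketch below is a hypothetical model, not the schema of any real review tool: the class names, the default 1 to 5 scale and two of the three sample statements are assumptions made for illustration.

```python
from dataclasses import dataclass

COMMENT_PROMPT = "Please provide additional comments as rationale for your answers above."


@dataclass
class RatingQuestion:
    skill: str
    statement: str
    scale: tuple = (1, 5)  # assumed rating scale


@dataclass
class ReviewSection:
    title: str
    questions: list
    comment_prompt: str = COMMENT_PROMPT

    def __post_init__(self):
        # Enforce the 3-5 structured questions per section described above.
        if not 3 <= len(self.questions) <= 5:
            raise ValueError("a section should hold 3 to 5 structured questions")

    def render(self):
        """Return the section as lines of text: title, questions, comment prompt."""
        lines = [self.title]
        for number, question in enumerate(self.questions, start=1):
            low, high = question.scale
            lines.append(f"{number}. {question.statement} ({low}-{high})")
        lines.append(self.comment_prompt)
        return lines


section = ReviewSection(
    title="Code quality",
    questions=[
        RatingQuestion("code review", "Jane's code reviews are thorough and constructive."),
        RatingQuestion("code structure", "Jane's code is well structured."),  # hypothetical
        RatingQuestion("problem solving", "Jane solves problems effectively in code."),  # hypothetical
    ],
)
print("\n".join(section.render()))
```

Keeping the comment prompt attached to the section, rather than to each question, mirrors the idea that the written rationale should explain a small group of related ratings.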

A 360-degree review (or multi-rater feedback) incorporates input from an employee’s peers, direct reports, and sometimes customers, in addition to the traditional top-down feedback from their manager. Including peer perspectives is widely considered a best practice in modern performance management, especially for roles that are highly collaborative. Whereas a manager-only review gives just a single point of view, a well-run 360 review paints a comprehensive picture of performance.

360-degree reviews must be designed thoughtfully to truly add value. Simply collecting a haphazard set of peer opinions can introduce new problems (like conflicting feedback or personal bias) if not managed well. Here are some best practices to maximize the benefits of 360° feedback while avoiding pitfalls:

Technical skills are notoriously difficult to evaluate objectively. The perception that a review has not been fair generally results in people not taking feedback seriously and feeling demotivated by the process.

To ensure that reviews are as objective as possible, it's important to take a step back and review the actual work someone has completed over time. Ask questions such as: What were a person's real achievements relative to the goals they set over the period? Were they well equipped and in a position to deliver on their goals effectively?

This goal review step can be incorporated as part of the core review process, or it can be a separate process altogether. Managing technical goals and OKRs is a lengthy topic, and we'll cover it in more detail at a later stage. For now, we'll say that this is an essential part of reviewing any product development team.

While structured reviews, 360-degree feedback, and goal alignment form the foundation of effective performance management, organizations can further strengthen their processes with the right analytics tools. Worklytics provides data-driven insights into team behavior and collaboration patterns that can significantly improve the quality and fairness of performance reviews.

One of the persistent challenges in reviews is mitigating bias and subjectivity. Managers and peers may unintentionally evaluate based on personal impressions rather than measurable performance. Worklytics helps counter this by providing objective metrics on work patterns, such as:

By grounding reviews in actual behavioral data, managers can ensure feedback is supported by facts rather than opinions, leading to reviews that feel fairer and more credible.

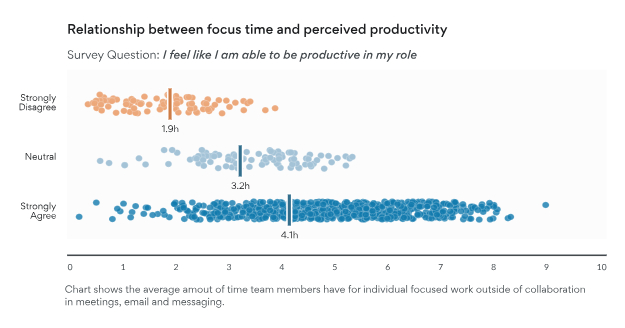

Instead of waiting for quarterly or annual review cycles, Worklytics surfaces ongoing trends that allow managers to deliver real-time coaching. For example, if a team shows early signs of burnout from excessive after-hours work, or if collaboration metrics drop sharply, leaders can address the issue immediately rather than waiting until the next review. This aligns with modern research showing that frequent feedback improves engagement and retention, especially among knowledge workers.

Performance reviews are most effective when they focus on measurable outcomes. Worklytics integrates with goal-tracking frameworks such as OKRs, making it possible to connect daily work patterns with broader objectives. This enables managers to evaluate not just what was achieved, but also how it was achieved—for instance, balancing high output with sustainable workload distribution and cross-team collaboration.

Beyond evaluation, reviews should guide professional growth. Worklytics highlights skill-related trends, such as whether engineers are spending sufficient time on innovation versus maintenance, or whether product managers are effectively engaging with cross-functional partners. These insights help shape more personalized development plans that are specific, actionable, and aligned with the company’s evolving needs.

In a 360-degree review, peers often struggle to articulate feedback beyond personal anecdotes. Worklytics supplements this by providing collaboration analytics that illustrate how individuals interact with teammates. For example, if data shows that an engineer consistently collaborates across multiple teams and contributes heavily to knowledge-sharing, this can validate and strengthen peer feedback.

By embedding Worklytics into the performance review process, organizations move beyond subjective impressions to a data-informed, continuous feedback culture. This not only improves the accuracy and fairness of evaluations but also empowers employees with clearer, more actionable guidance to grow in their roles.